A few months ago I wrote about Daniel Mintseris’ Lynda.com course on Ableton Live. I’ve finally had a chance to implement most of his advice, but I had one significant issue to overcome before I could fully port our set from Arrangement View to Session View.

In Arrangement View, we had the entire set laid out start to finish. In Session View, with each song set up as one scene, I needed a way to automatically fire the subsequent scene. I may not do this forever, but it would allow me to complete the migration without disrupting our current show strategy.

Firing a scene automatically seemed like a perfect job for Max for Live. In fact, Daniel pointed me toward Isotonik Studio’s Follow device to accomplish this, but I couldn’t get my head around it and found the documentation lacking. This seemed like a good opportunity to explore the M4L API, too.

@jeffvautin Jeff, @IsotonikStudios has a #max4live device called Follow, which allows Follow Actions for Scenes. give it a try.

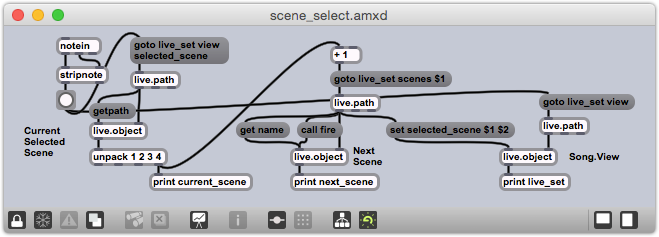

— Daniel Mintseris (@mindlessinertia) September 4, 2014

This is what I ended up building. It may not be pretty (yet), but it’s getting the job done, and I thought I’d share how it works:

The high level process is:

- Figure out which scene is currently selected.

- Get the ID of the next scene.

- Send a message to the view to select that next scene.
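The three steps above can be sketched outside of Max as plain functions. This is only a model of the logic, not Max or Live API code: the scene IDs below are made up, and the choice to stay on the current scene when there is no next one is an assumption, since the post doesn't say what happens at the end of the set.

```python
from typing import List, Optional

# Hypothetical model of the set: scene IDs in order, as the Live API might
# report them. The numbers themselves are invented for illustration.
scene_ids: List[int] = [101, 102, 103, 104]


def current_scene_index(selected_id: int, scenes: List[int]) -> int:
    """Step 1: figure out which scene is currently selected (its position)."""
    return scenes.index(selected_id)


def next_scene_id(selected_id: int, scenes: List[int]) -> Optional[int]:
    """Step 2: get the ID of the next scene, or None on the last scene."""
    i = current_scene_index(selected_id, scenes)
    return scenes[i + 1] if i + 1 < len(scenes) else None


def select_next(selected_id: int, scenes: List[int]) -> int:
    """Step 3: 'select' the next scene; stay put if there is none (assumption)."""
    nxt = next_scene_id(selected_id, scenes)
    return selected_id if nxt is None else nxt


if __name__ == "__main__":
    print(select_next(102, scene_ids))  # advances to the following scene
    print(select_next(104, scene_ids))  # last scene: stays where it is
```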

As always, the devil is in the details:

- The device monitors incoming MIDI notes via `notein`. `stripnote` removes the note-off messages, so only the note-on messages are sent to `button`.

- The `button` is there to provide a visual cue when the device fires, but also to allow for simple debugging. It converts the note-on messages into simple bangs.

- The bang fires a `goto live_set view selected_scene` message to a `live.path` object, which then generates the ID of the currently selected scene at its left output.

- A `live.object` receives the ID of the selected scene, and then immediately receives a `getpath` message. This message produces the canonical path of the currently selected scene.

- The fourth argument of the path is the number of the currently selected scene; the `unpack` object isolates this number.

- The current scene number is incremented and passed into the `goto live_set scenes $1` message, which is then passed to a second `live.path` object to get the unique ID of the next scene.

- Now that we’ve found the ID of the next scene, we can set it with the `set selected_scene $1 $2` message. This is sent to another `live.object` that represents the Live View.
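The message plumbing in the list above can be mimicked with string handling, which may make it easier to see what each object contributes. This is a sketch under assumptions: it treats a note-on with velocity 0 as a note-off (as `stripnote` does), assumes the `getpath` reply arrives as the atoms `path live_set scenes 3` (which matches the post's note that the fourth item is the scene number), and assumes `live.path` reports the next scene as the two atoms `id N`, which fill `$1 $2`. The function names are hypothetical.

```python
from typing import Iterable, Iterator, Tuple


def strip_note(events: Iterable[Tuple[int, int]]) -> Iterator[Tuple[int, int]]:
    """Mimic stripnote: drop note-offs (velocity 0), pass note-ons through."""
    for pitch, velocity in events:
        if velocity > 0:
            yield pitch, velocity


def scene_index_from_path(path_message: str) -> int:
    """Pick the 4th atom of a reply like 'path live_set scenes 3' (unpack's job)."""
    atoms = path_message.split()
    return int(atoms[3])


def goto_next_scene_message(path_message: str) -> str:
    """Increment the current index and fill in 'goto live_set scenes $1'."""
    next_index = scene_index_from_path(path_message) + 1
    return f"goto live_set scenes {next_index}"


def set_selected_scene_message(id_message: str) -> str:
    """Fill 'set selected_scene $1 $2' with the two atoms of 'id N'."""
    first, second = id_message.split()
    return f"set selected_scene {first} {second}"


if __name__ == "__main__":
    # One note-on followed by its note-off: only the note-on survives.
    for _ in strip_note([(60, 100), (60, 0)]):
        print(goto_next_scene_message("path live_set scenes 3"))
        print(set_selected_scene_message("id 42"))
```

Each note-on that survives the filter corresponds to one bang from `button`, and each bang walks the same chain of messages once.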

As it currently stands, any MIDI note-on will fire the action. I plan to extend the behavior to be able to jump to specific scenes, and I’ll use the note number as the scene number. I believe I’ll use note 0 to represent the ‘next scene’ action, so that’s what I’m using for this behavior in our current set.
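The planned extension could be sketched as a small dispatch function that turns a note number into the scene to select. This is a hypothetical design, not something the device does yet: it reserves note 0 for "next scene", as described above, and takes "note number as the scene number" literally as a zero-based Live API scene index. Notes that point past the last scene are ignored, which is also an assumption.

```python
from typing import Optional

NEXT_SCENE_NOTE = 0  # note 0 means "advance to the next scene"


def target_scene_index(note: int, current_index: int, scene_count: int) -> Optional[int]:
    """Map a note-on pitch to a scene index to select, or None to ignore it.

    Hypothetical mapping: any note other than 0 jumps straight to the
    scene index with the same number.
    """
    if note == NEXT_SCENE_NOTE:
        candidate = current_index + 1
    else:
        candidate = note
    return candidate if 0 <= candidate < scene_count else None


if __name__ == "__main__":
    print(target_scene_index(0, 2, 5))  # next scene after index 2
    print(target_scene_index(3, 0, 5))  # jump directly to index 3
    print(target_scene_index(7, 0, 5))  # out of range, ignored
```

One consequence of this literal mapping is that the first scene (index 0) can't be targeted by a direct jump, because note 0 is already taken; offsetting the jump notes by one would be one way around that.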