Band of Horses, NOS Alive.

Hot doge.

Apple provides a set of audio tools that you can use when you’re mastering music. In particular, the afclip utility is a fantastic analysis tool. You can use it to make sure your tracks have enough headroom for a clean encoding in the iTunes Plus format.

To use afclip, first download it from Apple and run the installer.[1] Then open a Terminal window and type afclip FILENAME, replacing FILENAME with the name of your file. If you don’t want to type out the filename, you can drag the file onto the Terminal window.

When you run the utility, hopefully you’ll just see some text that indicates everything is fine with your file:

    afclip : "01_Room_5.17.16.wav" 2 ch, 44100 Hz, 'lpcm' (0x0000000C) 16-bit little-endian signed integer
    -- no samples clipped --

But you may see more detail:

    afclip : "06_Wall_5.17.16.wav" 2 ch, 44100 Hz, 'lpcm' (0x0000000C) 16-bit little-endian signed integer
    CLIPPED SAMPLES:
       SECONDS       SAMPLE  CHAN      VALUE  DECIBELS
     57.385431   2530697.50     1  -1.006987  0.060476
     65.400034   2884141.50     1  -1.001764  0.015309
    195.992483   8643268.50     2   1.009248  0.079958
    198.687313   8762110.50     1   1.005957  0.051587
    214.678878   9467338.50     1   1.002328  0.020199
    222.696179   9820901.50     1   1.001868  0.016213
    234.043322  10321310.50     1   1.001779  0.015437
    total clipped samples Left on-sample: 0 inter-sample: 6
    total clipped samples Right on-sample: 0 inter-sample: 1

This indicates that this song would clip in seven places when played back. Interestingly, all of the clips are identified as ‘inter-sample’. This means the waveform exceeds 0dBFS between the samples that are actually present in the file. Inter-sample peaks are often the result of using a non-oversampling brick-wall limiter, such as the Waves L1. These limiters ensure that every sample is below the threshold you set, but they don’t make any promises about the waveform between samples.

In this case, we can fix the issue by attenuating the track by 0.08 dB, just enough to cover the largest overshoot in the output above (0.079958 dB). A change that small should be inaudible. It could be much worse: in theory, there’s no limit to how large your inter-sample peaks can be. In practice, they’re often less than 2 dB.

Inter-sample peaks matter for (at least) two reasons:

1. When the tracks are played back through a digital-to-analog converter (DAC), the analog signal may clip if the DAC wasn’t designed to provide headroom for inter-sample peaks. This is less of a problem on expensive playback equipment.

2. Inter-sample peaks can cause distortion when the tracks are being encoded to lossy formats such as MP3 or AAC. Since this is the main way people are getting their music these days, it’s really important to check for this!

[1] Ironically, the installer fails the Gatekeeper check because it is “from an unidentified developer”.

#mastering

#streethockey

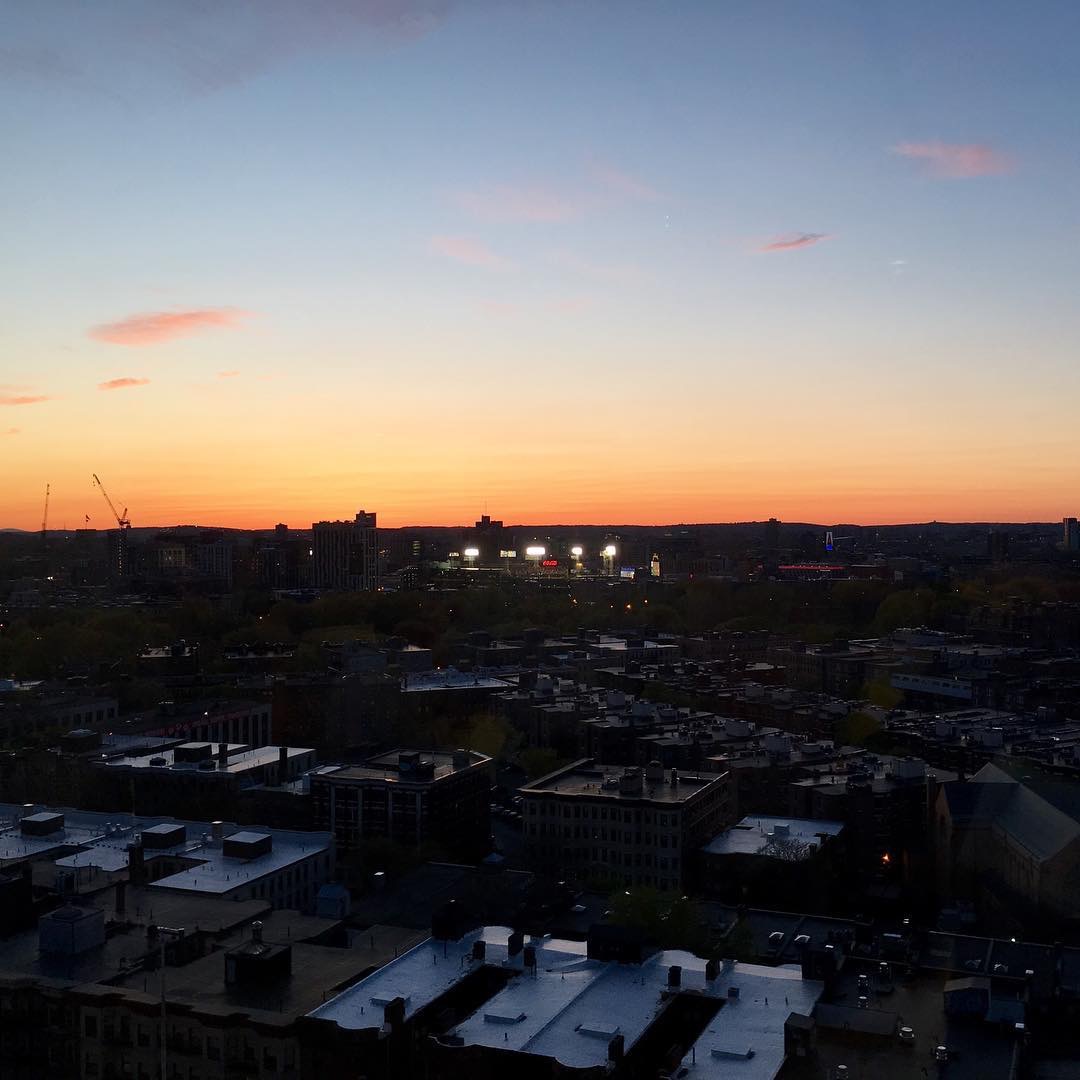

#sunset #fenway

I’ve shared an example iOS project on GitHub that demonstrates how to use an audio processing tap (the audioTapProcessor property of AVMutableAudioMixInputParameters) with AVPlayer to process music in the iPod Music Library. I cribbed liberally from Chris’ Coding Blog, NVDSP, and the Learning Core Audio book.

Chris’ Coding Blog shows how to set up the audioProcessingTap with an MTAudioProcessingTap struct, and in his example he uses the Accelerate framework to apply a volume gain:

#define LAKE_LEFT_CHANNEL (0)

#define LAKE_RIGHT_CHANNEL (1)

void process(MTAudioProcessingTapRef tap, CMItemCount numberFrames,

MTAudioProcessingTapFlags flags, AudioBufferList *bufferListInOut,

CMItemCount *numberFramesOut, MTAudioProcessingTapFlags *flagsOut)

{

OSStatus err = MTAudioProcessingTapGetSourceAudio(tap, numberFrames, bufferListInOut,

flagsOut, NULL, numberFramesOut);

if (err) NSLog(@"Error from GetSourceAudio: %d", (int)err);

LAKEViewController *self = (__bridge LAKEViewController *) MTAudioProcessingTapGetStorage(tap);

float scalar = self.slider.value;

vDSP_vsmul(bufferListInOut->mBuffers[LAKE_RIGHT_CHANNEL].mData, 1, &scalar, bufferListInOut->mBuffers[LAKE_RIGHT_CHANNEL].mData, 1, bufferListInOut->mBuffers[LAKE_RIGHT_CHANNEL].mDataByteSize / sizeof(float));

vDSP_vsmul(bufferListInOut->mBuffers[LAKE_LEFT_CHANNEL].mData, 1, &scalar, bufferListInOut->mBuffers[LAKE_LEFT_CHANNEL].mData, 1, bufferListInOut->mBuffers[LAKE_LEFT_CHANNEL].mDataByteSize / sizeof(float));

}
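For anyone not familiar with Accelerate, vDSP_vsmul multiplies every element of a vector by a single scalar, honouring a stride on the input and the output. A plain C reference of that operation might look like the sketch below. `scale_samples` is a name I made up, and the loop is only meant to show the arithmetic, not to replace the vectorised routine.

```c
#include <stddef.h>

/* Scalar reference for a strided multiply-by-constant:
   out[i * out_stride] = in[i * in_stride] * (*scalar).
   `in` and `out` may point at the same buffer for in-place processing. */
void scale_samples(const float *in, ptrdiff_t in_stride, const float *scalar,
                   float *out, ptrdiff_t out_stride, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        out[(ptrdiff_t)i * out_stride] = in[(ptrdiff_t)i * in_stride] * (*scalar);
    }
}
```

Called in place with a stride of 1 on each channel buffer, this applies the same gain as the two vDSP_vsmul calls above.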

The NVDSP class implementation in NVDSP shows an example of using the vDSP_deq22() routine to filter using a biquad:

- (void) filterContiguousData: (float *)data numFrames:(UInt32)numFrames channel:(UInt32)channel {

// Provide buffer for processing

float tInputBuffer[numFrames + 2];

float tOutputBuffer[numFrames + 2];

// Copy the data

memcpy(tInputBuffer, gInputKeepBuffer[channel], 2 * sizeof(float));

memcpy(tOutputBuffer, gOutputKeepBuffer[channel], 2 * sizeof(float));

memcpy(&(tInputBuffer[2]), data, numFrames * sizeof(float));

// Do the processing

vDSP_deq22(tInputBuffer, 1, coefficients, tOutputBuffer, 1, numFrames);

// Copy the data

memcpy(data, tOutputBuffer + 2, numFrames * sizeof(float));

memcpy(gInputKeepBuffer[channel], &(tInputBuffer[numFrames]), 2 * sizeof(float));

memcpy(gOutputKeepBuffer[channel], &(tOutputBuffer[numFrames]), 2 * sizeof(float));

}
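The two “keep” buffers exist because a biquad needs the previous two inputs and outputs, and those have to survive from one audio callback to the next. The same difference equation can be written as a plain C loop with an explicit history struct. This is a sketch with names of my own (`BiquadHistory`, `biquad_process`), assuming the coefficients are ordered b0, b1, b2, a1, a2 and already divided by a0, which is the layout vDSP_deq22 expects.

```c
#include <stddef.h>

/* The last two inputs and outputs, carried from one block to the next. */
typedef struct {
    float x1, x2; /* x[n-1], x[n-2] */
    float y1, y2; /* y[n-1], y[n-2] */
} BiquadHistory;

/* Filters `samples` in place.
   coeffs = { b0, b1, b2, a1, a2 }, each already divided by a0:
   y[n] = b0*x[n] + b1*x[n-1] + b2*x[n-2] - a1*y[n-1] - a2*y[n-2] */
void biquad_process(const float coeffs[5], BiquadHistory *history,
                    float *samples, size_t count)
{
    for (size_t n = 0; n < count; n++) {
        float x0 = samples[n];
        float y0 = coeffs[0] * x0
                 + coeffs[1] * history->x1
                 + coeffs[2] * history->x2
                 - coeffs[3] * history->y1
                 - coeffs[4] * history->y2;
        history->x2 = history->x1;
        history->x1 = x0;
        history->y2 = history->y1;
        history->y1 = y0;
        samples[n] = y0;
    }
}
```

Because the history lives outside the function, filtering a buffer in one call or in several smaller calls gives the same result, which is exactly what you need when audio arrives in callback-sized chunks. Use one `BiquadHistory` per channel.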

You’ll also see the CheckError() function from the Learning Core Audio book.
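The idea behind CheckError() is that many Core Audio status codes are four-character codes packed into a 32-bit integer, so printing them as text is often more helpful than printing a number. Here is a portable sketch of just that decoding step. `format_status` is a hypothetical helper, not something from the book or from Apple’s headers, and it extracts the bytes by shifting rather than by casting a pointer.

```c
#include <ctype.h>
#include <stdint.h>
#include <stdio.h>

/* Writes a readable form of a status code into `out`: the four characters
   in single quotes when every byte is printable, otherwise the signed
   decimal value. */
void format_status(int32_t status, char *out, size_t out_size)
{
    uint32_t bits = (uint32_t)status;
    unsigned char c[4] = {
        (unsigned char)(bits >> 24),
        (unsigned char)(bits >> 16),
        (unsigned char)(bits >> 8),
        (unsigned char)bits
    };
    if (isprint(c[0]) && isprint(c[1]) && isprint(c[2]) && isprint(c[3])) {
        snprintf(out, out_size, "'%c%c%c%c'", c[0], c[1], c[2], c[3]);
    } else {
        snprintf(out, out_size, "%d", (int)status);
    }
}
```

A status whose bytes spell out text, such as 0x666D743F, comes back as a quoted four-character string, while a small negative code such as -50 falls through to the decimal branch.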

I pieced these together to implement a variable lowpass filter on music in the iPod Music Library. It wasn’t too complicated, though I’m sure there are problems with this implementation. Feel free to make use of this code, and I’ll definitely accept pull requests if anyone finds this useful.

Here’s the header, ProcessedAudioPlayer.h:

#import <Foundation/Foundation.h>

@interface ProcessedAudioPlayer : NSObject

@property (strong, nonatomic) NSURL *assetURL;

@property (nonatomic) BOOL filterEnabled;

@property (nonatomic) float filterCornerFrequency;

@property (nonatomic) float volumeGain;

@end

And the body, ProcessedAudioPlayer.m:

#import "ProcessedAudioPlayer.h"

@import AVFoundation;

@import Accelerate;

#define CHANNEL_LEFT 0

#define CHANNEL_RIGHT 1

#define NUM_CHANNELS 2

#pragma mark - Struct

typedef struct FilterState {

float *gInputKeepBuffer[NUM_CHANNELS];

float *gOutputKeepBuffer[NUM_CHANNELS];

float coefficients[5];

float gain;

} FilterState;

#pragma mark - Audio Processing

static void CheckError(OSStatus error, const char *operation)

{

if (error == noErr) return;

char errorString[20];

// see if it appears to be a 4-char-code

*(UInt32 *)(errorString + 1) = CFSwapInt32HostToBig(error);

if (isprint(errorString[1]) && isprint(errorString[2]) && isprint(errorString[3]) && isprint(errorString[4])) {

errorString[0] = errorString[5] = '\'';

errorString[6] = '\0';

} else

// no, format it as an integer

sprintf(errorString, "%d", (int)error);

fprintf(stderr, "Error: %s (%s)\n", operation, errorString);

exit(1);

}

OSStatus BiquadFilter(float* inCoefficients,

float* ioInputBufferInitialValue,

float* ioOutputBufferInitialValue,

CMItemCount inNumberFrames,

void* ioBuffer) {

// Provide buffer for processing

float tInputBuffer[inNumberFrames + 2];

float tOutputBuffer[inNumberFrames + 2];

// Copy the two frames we stored into the start of the inputBuffer, filling the rest with the current buffer data

memcpy(tInputBuffer, ioInputBufferInitialValue, 2 * sizeof(float));

memcpy(tOutputBuffer, ioOutputBufferInitialValue, 2 * sizeof(float));

memcpy(&(tInputBuffer[2]), ioBuffer, inNumberFrames * sizeof(float));

// Do the filtering

vDSP_deq22(tInputBuffer, 1, inCoefficients, tOutputBuffer, 1, inNumberFrames);

// Copy the data

memcpy(ioBuffer, tOutputBuffer + 2, inNumberFrames * sizeof(float));

memcpy(ioInputBufferInitialValue, &(tInputBuffer[inNumberFrames]), 2 * sizeof(float));

memcpy(ioOutputBufferInitialValue, &(tOutputBuffer[inNumberFrames]), 2 * sizeof(float));

return noErr;

}

@interface ProcessedAudioPlayer () {

FilterState filterState;

}

@property (strong, nonatomic) AVPlayer *player;

@end

@implementation ProcessedAudioPlayer

#pragma mark - Lifecycle

- (instancetype)init {

self = [super init];

if (self) {

_filterEnabled = YES;

_filterCornerFrequency = 1000.0;

// Setup FilterState struct

for (int i = 0; i < NUM_CHANNELS; i++) {

filterState.gInputKeepBuffer[i] = (float *)calloc(2, sizeof(float));

filterState.gOutputKeepBuffer[i] = (float *)calloc(2, sizeof(float));

}

[self updateFilterCoeffs];

filterState.gain = 0.5;

}

return self;

}

- (void)dealloc {

for (int i = 0; i < NUM_CHANNELS; i++) {

free(filterState.gInputKeepBuffer[i]);

free(filterState.gOutputKeepBuffer[i]);

}

}

#pragma mark - Setters/Getters

- (void)setVolumeGain:(float)volumeGain {

filterState.gain = volumeGain;

}

- (float)volumeGain {

return filterState.gain;

}

- (void)setFilterEnabled:(BOOL)filterEnabled {

if (_filterEnabled != filterEnabled) {

_filterEnabled = filterEnabled;

[self updateFilterCoeffs];

}

}

- (void)setFilterCornerFrequency:(float)filterCornerFrequency {

if (_filterCornerFrequency != filterCornerFrequency) {

_filterCornerFrequency = filterCornerFrequency;

[self updateFilterCoeffs];

}

}

- (void)setAssetURL:(NSURL *)assetURL {

if (_assetURL != assetURL) {

_assetURL = assetURL;

[self.player pause];

// Create the AVAsset

AVAsset *asset = [AVAsset assetWithURL:_assetURL];

assert(asset);

// Create the AVPlayerItem

AVPlayerItem *playerItem = [AVPlayerItem playerItemWithAsset:asset];

assert(playerItem);

assert([asset tracks]);

assert([[asset tracks] count]);

AVAssetTrack *audioTrack = [[asset tracks] objectAtIndex:0];

AVMutableAudioMixInputParameters *inputParams = [AVMutableAudioMixInputParameters audioMixInputParametersWithTrack:audioTrack];

// Create a processing tap for the input parameters

MTAudioProcessingTapCallbacks callbacks;

callbacks.version = kMTAudioProcessingTapCallbacksVersion_0;

callbacks.clientInfo = &filterState;

callbacks.init = init;

callbacks.prepare = prepare;

callbacks.process = process;

callbacks.unprepare = unprepare;

callbacks.finalize = finalize;

MTAudioProcessingTapRef tap;

// The create function makes a copy of our callbacks struct

OSStatus err = MTAudioProcessingTapCreate(kCFAllocatorDefault, &callbacks,

kMTAudioProcessingTapCreationFlag_PostEffects, &tap);

if (err || !tap) {

NSLog(@"Unable to create the Audio Processing Tap");

return;

}

assert(tap);

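// Assign the tap to the input parameters. The property retains the tap,

// so release the reference we got from MTAudioProcessingTapCreate.

inputParams.audioTapProcessor = tap;

CFRelease(tap);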

// Create a new AVAudioMix and assign it to our AVPlayerItem

AVMutableAudioMix *audioMix = [AVMutableAudioMix audioMix];

audioMix.inputParameters = @[inputParams];

playerItem.audioMix = audioMix;

self.player = [AVPlayer playerWithPlayerItem:playerItem];

assert(self.player);

[self.player play];

}

}

#pragma mark - Utilities

- (void)updateFilterCoeffs {

float a0, b0, b1, b2, a1, a2;

if (self.filterEnabled) {

float Fc = self.filterCornerFrequency;

float Q = 0.7071;

float samplingRate = 44100.0;

float omega, omegaS, omegaC, alpha;

omega = 2*M_PI*Fc/samplingRate;

omegaS = sin(omega);

omegaC = cos(omega);

alpha = omegaS / (2*Q);

a0 = 1 + alpha;

b0 = ((1 - omegaC)/2);

b1 = ((1 - omegaC));

b2 = ((1 - omegaC)/2);

a1 = (-2 * omegaC);

a2 = (1 - alpha);

} else {

a0 = 1.0;

b0 = 1.0;

b1 = 0.0;

b2 = 0.0;

a1 = 0.0;

a2 = 0.0;

}

filterState.coefficients[0] = b0/a0;

filterState.coefficients[1] = b1/a0;

filterState.coefficients[2] = b2/a0;

filterState.coefficients[3] = a1/a0;

filterState.coefficients[4] = a2/a0;

}

#pragma mark MTAudioProcessingTap Callbacks

void init(MTAudioProcessingTapRef tap, void *clientInfo, void **tapStorageOut)

{

NSLog(@"Initialising the Audio Tap Processor");

*tapStorageOut = clientInfo;

}

void finalize(MTAudioProcessingTapRef tap)

{

NSLog(@"Finalizing the Audio Tap Processor");

}

void prepare(MTAudioProcessingTapRef tap, CMItemCount maxFrames, const AudioStreamBasicDescription *processingFormat)

{

NSLog(@"Preparing the Audio Tap Processor");

UInt32 format4cc = CFSwapInt32HostToBig(processingFormat->mFormatID);

NSLog(@"Sample Rate: %f", processingFormat->mSampleRate);

NSLog(@"Channels: %u", (unsigned int)processingFormat->mChannelsPerFrame);

NSLog(@"Bits: %u", (unsigned int)processingFormat->mBitsPerChannel);

NSLog(@"BytesPerFrame: %u", (unsigned int)processingFormat->mBytesPerFrame);

NSLog(@"BytesPerPacket: %u", (unsigned int)processingFormat->mBytesPerPacket);

NSLog(@"FramesPerPacket: %u", (unsigned int)processingFormat->mFramesPerPacket);

NSLog(@"Format Flags: %u", (unsigned int)processingFormat->mFormatFlags);

NSLog(@"Format ID: %4.4s", (char *)&format4cc);

// Looks like this is returning 44.1KHz LPCM @ 32 bit float, packed, non-interleaved

}

void process(MTAudioProcessingTapRef tap, CMItemCount numberFrames,

MTAudioProcessingTapFlags flags, AudioBufferList *bufferListInOut,

CMItemCount *numberFramesOut, MTAudioProcessingTapFlags *flagsOut)

{

// Alternatively, the frame count can be derived from the buffer size:

// UInt32 numFrames = bufferListInOut->mBuffers[CHANNEL_RIGHT].mDataByteSize / sizeof(float);

CheckError(MTAudioProcessingTapGetSourceAudio(tap,

numberFrames,

bufferListInOut,

flagsOut,

NULL,

numberFramesOut), "GetSourceAudio failed");

FilterState *filterState = (FilterState *) MTAudioProcessingTapGetStorage(tap);

float scalar = filterState->gain;

vDSP_vsmul(bufferListInOut->mBuffers[CHANNEL_RIGHT].mData,

1,

&scalar,

bufferListInOut->mBuffers[CHANNEL_RIGHT].mData,

1,

numberFrames);

vDSP_vsmul(bufferListInOut->mBuffers[CHANNEL_LEFT].mData,

1,

&scalar,

bufferListInOut->mBuffers[CHANNEL_LEFT].mData,

1,

numberFrames);

CheckError(BiquadFilter(filterState->coefficients,

filterState->gInputKeepBuffer[CHANNEL_RIGHT],

filterState->gOutputKeepBuffer[CHANNEL_RIGHT],

numberFrames,

bufferListInOut->mBuffers[CHANNEL_RIGHT].mData), "Couldn't process Right channel");

CheckError(BiquadFilter(filterState->coefficients,

filterState->gInputKeepBuffer[CHANNEL_LEFT],

filterState->gOutputKeepBuffer[CHANNEL_LEFT],

numberFrames,

bufferListInOut->mBuffers[CHANNEL_LEFT].mData), "Couldn't process Left channel");

}

void unprepare(MTAudioProcessingTapRef tap)

{

NSLog(@"Unpreparing the Audio Tap Processor");

}

@end

New lens: Olympus 25mm f/1.8

I just watched James’ Live Pro Tools Playback Rig on Pro Tools Expert, mainly because I was curious to learn what advantages he found with Pro Tools over Ableton Live (which I’ve written about previously).

He uses four outputs from an Audient iD22 to drive:

He sets their gig up as a linear show, and effectively just presses play. He has memory markers he can use to jump around in the set, in case they decide to change something on the fly.

The biggest tips he offers are:

But what are the advantages over Ableton Live? He addresses this at the end of the video:

The thing that most people say is “Why don’t you use Ableton Live, Live?”, and there’s a really simple answer: I don’t know it as well as I know Pro Tools.

That seems completely reasonable, and I’ll be sticking with Ableton.